Introduction

This article explains the different caching mechanisms that are used to improve page delivery speeds. Infradox XS systems use caching of configuration settings, metadata, database data, templates and parsed page fragments.

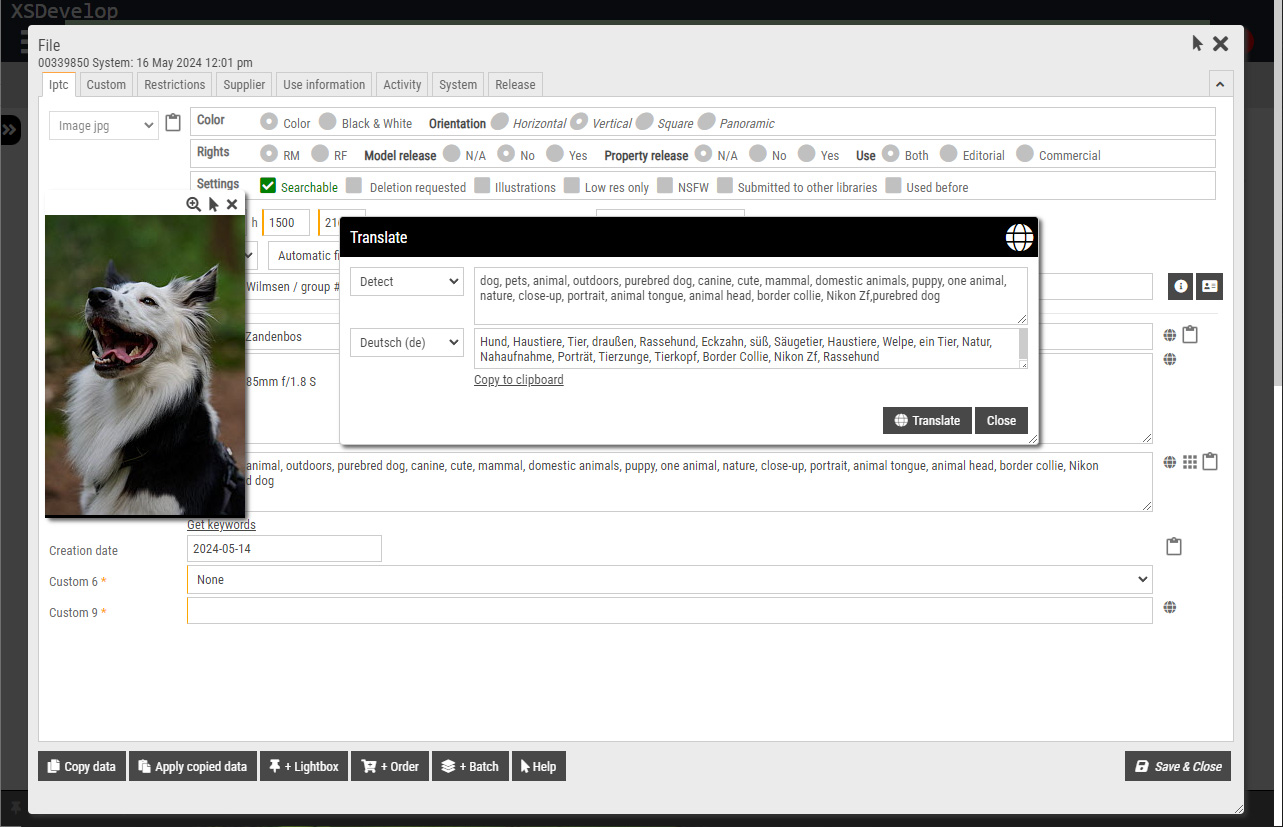

- Metadata – the data that describes your files, e.g. titles, keywords, captions and so on.

- Database data – any data stored in the database, e.g. keyword lists, page texts, restrictions linked to files, lightboxes, galleries and so on.

- Templates – every HTML page is generated dynamically by reading its base template and inserting data, inserting page fragments, removing/adding parts based on a user’s permissions and so on. The process of building a HTML page from all of this is called “parsing”.

- Page fragments – small parts that are used in the process of assembling and creating resulting HTML pages.

Caching of templates and website settings is a simple mechanism that doesn’t need a detailed explanation other than what you can read at the bottom of this article.

Having an understanding of how metadata caching and database caching works however, is important for your day-to-day website work and when configuring your Infradox website. In the first section of this article, we explain how metadata caching works (Infradox API Broker). Further down the article you’ll find a description of database caching (Infradox Data Broker) and other caching software.

Infradox API Broker / Search and metadata caching

API data

First, a primer about how the Infradox XS API broker middleware works.

Data that is required to display anything file related (e.g. search results, previews, gallery pages, the cart, lightboxes, orders and so on) is retrieved through the Infradox API Broker middleware. This software “translates” requests for each connected API (i.e. your own Infradox API and/or any 3rd party API’s), it then calls each connected API, and finally it standardises the resulting data into something your website can work with. In this context, “translation” means that every API works in its own specific way, requiring specific requests and request parameters. The API Broker middleware so to speak knows how to “talk” to each of the connected API’s. The API Broker middleware is multi-threaded and executes “API requests” on all API’s simultaneously, but it must wait for all API’s to finish until it can return the combined results to your website – where finally the HTML page is generated. An “API request” can be e.g. a search command or a command to get the metadata for a preview page and so on. Note that the API Broker works this way regardless of how many API’s are connected, even if your website uses just your own internal Infradox API. The Infradox API is connected internally and any 3rd party API’s are connected via HTTP/the internet.

If data can be read from the in-memory cache, the API Broker will not have to execute the actual API request. This is of course faster because there’s no need to establish API connections, no database access, no cache-writing and so on.

Searching and caching

When a user searches for a keyword on your website, the API Broker is invoked to execute a search request. The result is a set of file identifiers that we refer to as “keys”. These keys are then used to retrieve the data for the first page of thumbnails to display. This search request itself, with its found keys are stored in the cache. When the user returns to the search results, or when the user (or another user) executes the same search, the keys are immediately returned from the cache – without actually searching and without connecting any of the API’s. The metadata that is required to display the thumbnail pages is also stored in the cache. Whenever metadata for a thumbnail page is needed, the API Broker first checks the cache, and only if the metadata for one or more keys is not yet in the cache, will it execute the necessary API requests. For example, to display the first page of 24 thumbnails after a search for “portrait”, the data for 18 keys is found in the cache. The API Broker will execute API requests to retrieve and cache the metadata for the 6 missing keys.

Pre-caching next pages

As explained above, every time the API Broker is invoked to get the metadata for a page of thumbnails, it receives the keys of the files for which data must be retrieved (the page that the user is requesting). It also receives the keys for the next page. A call to get the data for the current page is executed immediately. And a request to get the data for the next page is posted to the data request queue. The website needs to wait for the first call (getting data for the current page) to finish, so that it can finally build and display the page. But the second call is executed in a background thread, without waiting for it to finish. So without delaying the requested page of thumbnails. As a result, when the user clicks on a button to go to the next page of thumbnails, the API Broker may be able to return all the data from the cache immediately. This obviously results in a much faster experience.

Pre-caching preview data

When the API Broker posts a next page pre-caching request to the queue, it also sends the keys of the current page. These keys are used to execute a background request for the full metadata that is required to display a preview page. Provided that the current or previous requests have already finished, the API Broker will be able to return the full metadata required for the preview page from the cache – i.e. without actually executing an API request.

Note that the metadata required to build thumbnail pages, and the full metadata for previews, are cached separately.

Automatic pre-caching

You can specify a list of keywords that you want to use to pre-cache metadata for frequently requested files. To do this, go to Site configuration, Search settings and open the section “pre-caching”.

Background searches for each of the keywords are periodically executed, and the metadata for the first 100 files (for each keyword) is retrieved and cached. The searches are also cached, however, chances are that website visitors will not be able to profit from the cached searches because of filters, territory settings and so on. I.e. a search is unique based on what was search for, but also based on the active filters, user permissions and so on. The availability of cached metadata however, will speed up page delivery times considerably. So, a search request may have to be executed again, but the metadata for the found keys may already be available in memory. You should limit the number of keywords for this function for reasons explained below.

Updating the cache

When you add new files to your database, the search cache is cleared. The metadata caches however are not. Metadata for newly added files is automatically cached once the metadata is required for a thumbnail page or preview page.

After the metadata of files is updated, the cache server will receive the keys of the changed files. These metadata of the files is removed from the caches in a background thread.

Clearing the cache

Normally, there’s no need to manually clear the API broker / metadata cache. If however there’s a problem with the cached data, you can go to Site configuration, Data Services and scroll down to “API Broker caching”. Click the button “Clear API Broker cache”. Note that this will post a flush request to the cache server and it may take several minutes before the cache is actually cleared.

Caveats and recommendations

When logged in with an administrator account, data may not always be read from the cache as additional information may be required that is not available to normal users.

The cache data can be read by many at the same time, but only one request can write to it. This means that multiple requests to update the cache may be executed at the same time, but each write request locks the cache for writing by others. Other requests will wait for a maximum of x-miliseconds until the cache can be locked for writing. And if a lock can’t be required within this time, the request is ignored (data associated with the request will not be stored).

To keep cache writing times short, it is recommended to reduce the number of thumbnails on a page. When the data for a subsequent page is requested, the pre-caching request may still be running or it may still be waiting to acquire a cache-write lock. As a result, the API Broker will execute API requests to get the data again. Not being able to acquire a cache-write lock may also occur more frequently if there are many active users.

Note that the cache can be read, even when there are other requests writing to it. This is generally speaking not a problem. The software can however be configured to make cache-read actions wait until there’s no cache-write lock.

When you use the automatic pre-caching function (explained in the previous paragraph), these requests are executed one after another to minimize the chances of not being an able to acquiring a cache-write lock in a timely fashion. But as each request retrieves and stores the metadata for 100 files (the maximum number of thumbnails that one can select on a page), such requests may still hold up other requests or prevent other requests from storing the data.

Frequent file updates may interfere with the caching mechanisms. On busy websites, it is recommended to process large updates when there’s low activity. Instead of using the batch edit function, you may consider doing off-line updates and to schedule processing of your data updates with the built-in job server function.

Infradox Data Broker / Database data and parsed fragments caching

First a primer about the process of dynamic page creation.

Every page a website visitor requests is dynamically created on the server. The process of creating such a page may be the result of many server actions. E.g. reading session data, checking permissions, reading the raw template, removing or inserting parts depending on a user’s permissions, website configuration settings and so on, getting metadata, getting database data, updating session data – and finally returning the resulting HTML to the end-user’s browser. The process of building the resulting response (usually HTML) is called “parsing”. Considering that website configuration settings will not often change, and that certain database data will not change often either, performance improvements can be achieved by storing data in memory. This reduces parsing times and reduces database retrieval requests.

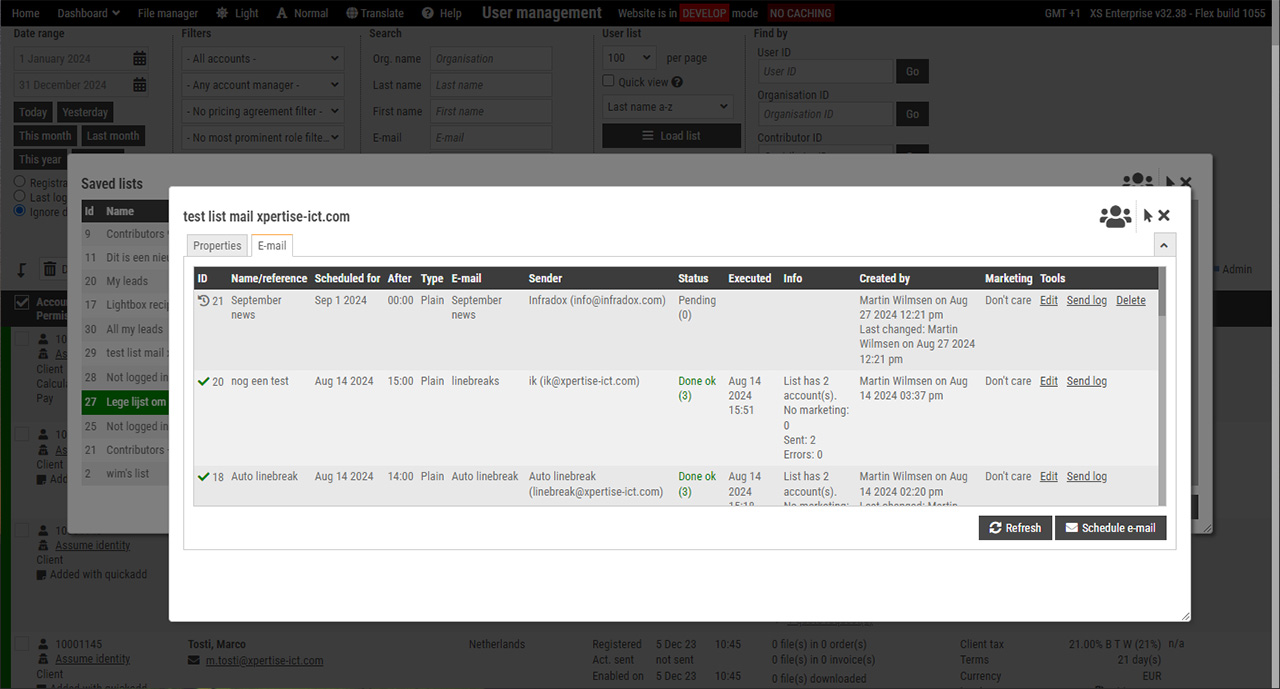

Data Broker caching

Instead of caching database data, Infradox Data Broker caches parsed page fragments – made up of HTML, already retrieved database data and so on. As a result, when a page is requested, it can be dynamically assembled from readily available cached fragments. Examples of such page fragments are the menu, the footer, the advanced search panel etc.

Viewing the Data Broker status and flushing its cache

XS backoffice has functions that let you check Data Broker cache use, and it also allows you to manually clear the cache in case there are problems (i.e. if you made changes and you don’t see your changes on the client facing pages because the old data is still taken from the cache). This is important, because if you use SQL queries in your custom pages, Data Broker doesn’t “know” it needs to remove the data from its cache after you change it.

Below is an explanation of the information that is displayed in backoffice (via Site configuration, Data services, Database cache).

- Status

Ok if the Data Broker cache is being used by your website and when it is up and running - Last initalized

Date/time of last service startup - Last garbage collect

Date/time when the service last executed its garbage collect function. This process removes old entries from the cache after such entries have not been accessed for x-minutes (setting Remove cache entries after) or after x-minutes if the entry has a fixed TTL (time-to-live). - Service interval every

The number of minutes after which the service checks if needs to run its garbage collect function. - Remove cache entries after

If x minutes have passed since the last time an entry was accessed, it will be removed by the garbage collect function. - Current memory use

Current use in Kilobytes - Maximum cache memory

The maximum memory your website is allowed to use for its cache in MB’s. - Currently cached entries

This shows the number of current entries in the cache, i.e. stored procedure calls and/or SQL queries. - Entries to never cache

This displays the number of entries that have been accessed and are marked as entries that may not be cached. - Total cache hit count

The total hit count shows how often entries are retrieved from the cache memory. - Fixed TTL entries cached

If yes, then there are entries in the cache that have a fixed time-to-live, i.e. these entries will be removed after a specific number of minutes regardless of other settings.

Suggestions server

The suggestions server is used to display suggested keywords as the end user types a few letters in the search box. It is also used by the tag cloud functions and the keyword pages. The service application caches words and associated information so that words can be retrieved from memory without having to access the database server.

Repository server

All repository settings are stored in memory and can be accessed without the need to execute database queries.

Template server

All templates, sub-templates, page fragments and so are stored in memory for instant access.

Server Configuration settings

This paragraph is for developers only. Currently use the following settings:

APIBroker settings (IAC)

- CacheMetadataMinutes 60 (anything larger than 0, leave at 60)

- AsyncCaching 0 (must be 0, wait for write-cache request to finish)

- CheckClearCacheEnabled 0 (must be 0, clearing cached entries should not be done in timer events)

- PreviewDataPreCaching 0 (must be 0)

- ReadDuringWrite 1 (1 to allow cache reads while the cache is locked for writing)

API Cache Queue server (XSAQS)

- Enabled 1

- MetadataThreading 1 (this must be 1)

XSISPA

- PreCacheGalleries 1 (1 to enable precaching of galleries, disable in case of high server load only)

- PreCacheSearches 1 (1 to enable precaching metadata for search results of predefined keywords)

- PreCacheIntervalMinutes 60 (default is 60 to delay the next cycle until 60 minutes after the last)